Last weekend, I attended GitHub's PyCodeConf in Miami Florida and had the opportunity to give a talk on array-oriented computing and Python. I would like to thank Tom and Chris (GitHub founders) for allowing me to come speak. I enjoyed my time there, but I have to admit I felt old and a bit out of place. There were a lot of young people there who understood a lot more about making web-pages and working at cool web start-ups than solving partial differential equations with arrays. Fortunately, Dave Beazley and Raymond Hettinger were there so I didn't feel completely ancient. In addition, Wes McKinney and Peter Wang helped me represent the NumPy community.

At the conference I was reminded of PyPy's recent success at showing the speedups some said weren't possible from a dynamic language like Python --- speed-ups which make it possible to achieve C-like speeds using Python constructs. This is very nice as it illustrates again that high-level languages can be compiled to low-level speeds. I also became keenly aware of the enthusiasm that has cropped up in porting NumPy to PyPy. I am happy for this enthusiasm as it illustrates the popularity of NumPy which pleases me. On the other hand, in every discussion I have heard or read about this effort, I'm not convinced that anyone excited about this porting effort actually understands the complexity of what they are trying to do nor the dangers that it could create in splitting the small community of developers who are regularly contributing to NumPy and SciPy and causing confusion for the user base of Python in Science.

I'm hopeful that I can provide some perspective. Before I do this, however, I want to congratulate the PyPy team and emphasize that I have the utmost respect for the PyPy developers and what they have achieved. I am also a true-believer in the ability for high-level languages to achieve faster-than-C speeds. In fact, I'm not satisfied with a Python JIT. I want the NumPy constructs such as vectorization, fancy indexing, and reduction to be JIT compiled. I also think that there are use-cases of NumPy all by itself that makes it somewhat interesting to do a NumPy-in-PyPy port. I would also welcome the potential things that can be learned about how to improve NumPy that would come out of trying to write a version of NumPy in RPython.

However, to avoid detracting from the overall success of Python in Science, Statistics, and Data Analysis, I think it is important that 3 things are completely clear to people interested in the NumPy on PyPy idea.

Most of the scientists and engineers who have come to Python over the past years have done so because it is so easy to integrate their legacy C/C++ and Fortran code into Python. National laboratories, large oil companies, large banks and many other Fortune 50 companies all must integrate their code into Python in order for Python to be part of their story. NumPy is part of the answer that helps them seamlessly view large amounts of data as arrays in Python or as arrays in another compiled language without the non-starter of copying the memory back and forth.

Once the port of NumPy to PyPy has finished, are you going to port SciPy? Are you going to port matplotlib? Are you going to port scikits.learn, or scikits.statsmodels? What about Sage? Most of these rely on not just the Python C-API but also the NumPy C-API which you would have to have a story for to make a serious technical user of Python get excited about a NumPy port to PyPy.

To me it is much easier to think about taking the ideas of PyPy and pulling them into the Scientific Python ecosystem then going the other way around. It's not to say that there isn't some value in re-writing NumPy in PyPy, it just shouldn't be over-sold and those who fund it should understand what they aren't getting in the transaction.

Some of the ideas that will be implemented include:

Even if PyPy continues as a separate eco-system, then there are points of collaboration that will benefit both groups. One of these is to continue the effort Microsoft initially funded to separate the C-parts of NumPy away from the CPython interface to NumPy. This work is now in a separate branch that has diverged from the main NumPy branch and needs to be re-visited. If people interested in NumPy on PyPy spent time on improving this refactoring into basically a NumPy C-library, then PyPy can call this independent library using its methods for making native calls just as CPython can call it using its extension approach. Then IronPython, Jython (and for that matter Ruby, Javascript, etc.) can all call the C-library and leverage the code. There is some effort to do this and it's not trivial. Perhaps, there is even a way for PyPy to generate C-libraries from Python source code --- now that would be an interesting way to collaborate.

The second way forward is for PyPy to interact better with the Cython community. Support in PyPy for Cython extension modules would be a first step. There is wide agreement among NumPy developers that more of NumPy should be written at a high-level (probably using Cython). Cython already is used to implement many, many extension modules for the Sage project. William Stein's valiant efforts in that community have made Cython the de-facto standard for how most scientists and engineers are writing extensions modules for Python these days. This is a good thing for efforts like PyPy because it adds a layer of indirection that allows PyPy to make a Cython backend and avoid the Python C-API.

I was quite astonished that Cython never came up in the panel discussion at the last PyCon when representatives from CPython, PyPy, IronPython, and Jython all talked about the Python VMs. To me that oversite was very troublesome. I was left doubting the PyPy community after Cython was not mentioned at all --- even when the discussion about how to manage extensions to the language came up during the panel discussion. It shows that pure Python developers on all fronts have lost sight of what the scientific Python community is doing. This is dangerous. I encourage Python developers to come to a SciPy conference and take a peek at what is going on. I hope to be able to contribute more to the discussion as well.

If you are a Python developer and want to extend an olive leaf, then put a matrix infix operator into the language. It's way past time :-)

At the conference I was reminded of PyPy's recent success at showing the speedups some said weren't possible from a dynamic language like Python --- speed-ups which make it possible to achieve C-like speeds using Python constructs. This is very nice as it illustrates again that high-level languages can be compiled to low-level speeds. I also became keenly aware of the enthusiasm that has cropped up in porting NumPy to PyPy. I am happy for this enthusiasm as it illustrates the popularity of NumPy which pleases me. On the other hand, in every discussion I have heard or read about this effort, I'm not convinced that anyone excited about this porting effort actually understands the complexity of what they are trying to do nor the dangers that it could create in splitting the small community of developers who are regularly contributing to NumPy and SciPy and causing confusion for the user base of Python in Science.

I'm hopeful that I can provide some perspective. Before I do this, however, I want to congratulate the PyPy team and emphasize that I have the utmost respect for the PyPy developers and what they have achieved. I am also a true-believer in the ability for high-level languages to achieve faster-than-C speeds. In fact, I'm not satisfied with a Python JIT. I want the NumPy constructs such as vectorization, fancy indexing, and reduction to be JIT compiled. I also think that there are use-cases of NumPy all by itself that makes it somewhat interesting to do a NumPy-in-PyPy port. I would also welcome the potential things that can be learned about how to improve NumPy that would come out of trying to write a version of NumPy in RPython.

However, to avoid detracting from the overall success of Python in Science, Statistics, and Data Analysis, I think it is important that 3 things are completely clear to people interested in the NumPy on PyPy idea.

- NumPy is just the beginning (SciPy, matplotlib, scikits, and 100s of other packages and legacy C/C++ and Fortran code are all very important)

- NumPy should be a lot faster than it is currently.

- NumPy has an ambitious roadmap and will be moving forward rather quickly over the coming years.

NumPy is just the beginning

Most of the people who use NumPy use it as an entry-point to the entire ecosystem of Scientific Packages available for Python. This ecosystem is huge. There are at least 1 million unique visitors to the http://www.scipy.org site every year and that is just an entry point to the very large and diverse community of technical computing users who rely on Python.Most of the scientists and engineers who have come to Python over the past years have done so because it is so easy to integrate their legacy C/C++ and Fortran code into Python. National laboratories, large oil companies, large banks and many other Fortune 50 companies all must integrate their code into Python in order for Python to be part of their story. NumPy is part of the answer that helps them seamlessly view large amounts of data as arrays in Python or as arrays in another compiled language without the non-starter of copying the memory back and forth.

Once the port of NumPy to PyPy has finished, are you going to port SciPy? Are you going to port matplotlib? Are you going to port scikits.learn, or scikits.statsmodels? What about Sage? Most of these rely on not just the Python C-API but also the NumPy C-API which you would have to have a story for to make a serious technical user of Python get excited about a NumPy port to PyPy.

To me it is much easier to think about taking the ideas of PyPy and pulling them into the Scientific Python ecosystem then going the other way around. It's not to say that there isn't some value in re-writing NumPy in PyPy, it just shouldn't be over-sold and those who fund it should understand what they aren't getting in the transaction.

C-speed is the wrong target

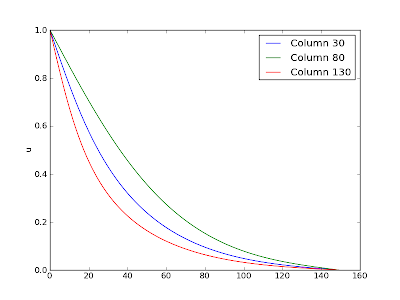

Several examples including my own previous blog post has shown that vectorized Fortran 90 can be 4-10 times faster than NumPy. Thus, we know there is room for improvement even on current single-core machines. This doesn't even take into account the optimizations that should be possible for multiple-cores, GPUs and even FPGAs all of which are in use today but are not being utilized to the degree they should be. NumPy needs to adapt to make use of this kind of hardware and will adapt in time.NumPy will be evolving rapidly over the coming years

The pace of NumPy development has leveled off in recent years, but this year has created a firestorm of new ideas that will be coming to fruition over the next 1-2 years and NumPy will be evolving fairly rapidly during that time. I am committed to making this happen and will be working very hard in 2012 on the code-base itself to realize some of the ideas that have emerged. Some of this work will require some re-factoring and re-writing as well. I would honestly rather collaborate with PyPy than compete, but my constraints are that I care very much about backward compatibility and very much about the entire SciPy ecosystem. I sacrificed a year of my life in 1999 (delaying my PhD graduation by at least 6-12 months) bringing SciPy to life. I sacrificed my tenure-track position in academia bringing NumPy to life in 2005. Constraints of keeping my family fed, clothed, and housed seem to keep me on this 6-7 year sabbatical-like cycle for SciPy/NumPy but it looks like next year I will finally be in a position to spend substantial time and take the next steps with NumPy to help it progress to the next stage.Some of the ideas that will be implemented include:

- integration of non-contiguous memory chunks into the NumPy array structure (generalization of strides)

- addition of labels to axes and dimensions (generalization of shape)

- derived fields, enumerated data-types, reference data-types, and indices for structured arrays

- improvements to the data-type infrastructure to make it easier to add new data-types

- improvements to the calculation infrastructure (iterators and fast general looping constructs)

- fancy-indexing as views

- integration of Pandas group-by features

- missing data bit-patterns

- distributed arrays

A way forward

I would love to see more scientific code written at a high-level without sacrificing run-time performance. The high-level intent allows for the creation of faster machine code than lower-level translations of the intent often does. I know this is possible and I intend to do everything I can professionally to see that happen (but from within the context of the entire SciPy ecosystem). As this work emerges, I will encourage PyPy developers to join us using the hard-won knowledge and tools they have created.Even if PyPy continues as a separate eco-system, then there are points of collaboration that will benefit both groups. One of these is to continue the effort Microsoft initially funded to separate the C-parts of NumPy away from the CPython interface to NumPy. This work is now in a separate branch that has diverged from the main NumPy branch and needs to be re-visited. If people interested in NumPy on PyPy spent time on improving this refactoring into basically a NumPy C-library, then PyPy can call this independent library using its methods for making native calls just as CPython can call it using its extension approach. Then IronPython, Jython (and for that matter Ruby, Javascript, etc.) can all call the C-library and leverage the code. There is some effort to do this and it's not trivial. Perhaps, there is even a way for PyPy to generate C-libraries from Python source code --- now that would be an interesting way to collaborate.

The second way forward is for PyPy to interact better with the Cython community. Support in PyPy for Cython extension modules would be a first step. There is wide agreement among NumPy developers that more of NumPy should be written at a high-level (probably using Cython). Cython already is used to implement many, many extension modules for the Sage project. William Stein's valiant efforts in that community have made Cython the de-facto standard for how most scientists and engineers are writing extensions modules for Python these days. This is a good thing for efforts like PyPy because it adds a layer of indirection that allows PyPy to make a Cython backend and avoid the Python C-API.

I was quite astonished that Cython never came up in the panel discussion at the last PyCon when representatives from CPython, PyPy, IronPython, and Jython all talked about the Python VMs. To me that oversite was very troublesome. I was left doubting the PyPy community after Cython was not mentioned at all --- even when the discussion about how to manage extensions to the language came up during the panel discussion. It shows that pure Python developers on all fronts have lost sight of what the scientific Python community is doing. This is dangerous. I encourage Python developers to come to a SciPy conference and take a peek at what is going on. I hope to be able to contribute more to the discussion as well.

If you are a Python developer and want to extend an olive leaf, then put a matrix infix operator into the language. It's way past time :-)